Discover the potential of ReLU in Machine Learning. Dive into the world of Rectified Linear Units and explore their significance in artificial neural networks. Get insights, FAQs, and expert knowledge in this comprehensive guide.

Artificial intelligence and machine learning have revolutionized the way we approach problem-solving and data analysis. In this ever-evolving landscape, understanding the core concepts and techniques is essential. One such concept that has gained immense popularity in recent years is the Rectified Linear Unit, or ReLU.

In this article, we will take an in-depth journey into the world of ReLU in Machine Learning. We will unravel its significance, applications, advantages, and potential challenges. So, fasten your seatbelts, and let’s explore the power of ReLU.

Table of Contents

- Understanding ReLU

- The Birth of Rectified Linear Units

- How ReLU Works

- Advantages of ReLU

- Applications of ReLU

- Challenges in ReLU Implementation

- ReLU vs. Sigmoid and Tanh

- Training Deep Neural Networks with ReLU

- Fine-tuning ReLU

- Hyperparameter Tuning for ReLU

- Overcoming the Vanishing Gradient Problem with ReLU

- ReLU in Convolutional Neural Networks

- Optimizing ReLU with Leaky ReLU

- Exponential Linear Unit (ELU)

- Parametric ReLU (PReLU)

- Swish Activation Function

- Comparing ReLU Variants

- Industry Impact of ReLU

- Success Stories with ReLU

- FAQs about ReLU in Machine Learning

- Conclusion

Understanding ReLU

ReLU, short for Rectified Linear Unit, is an activation function used in artificial neural networks. It serves as a critical component in deep learning models, enhancing their ability to learn and make predictions. ReLU’s simplicity and effectiveness have made it a go-to choice for many machine learning practitioners.

The Birth of Rectified Linear Units

Rectifier-style units appeared in neural network research decades ago, but ReLU only gained widespread recognition around 2010. Much of the credit for popularizing it goes to Vinod Nair and Geoffrey Hinton, whose 2010 work showed that rectified linear units improve restricted Boltzmann machines, and to later results that demonstrated its effectiveness in training deep neural networks. Before ReLU, activation functions like sigmoid and hyperbolic tangent (tanh) were commonly used, but they had limitations, notably saturation, that ReLU largely avoids.

How ReLU Works

ReLU is a piecewise linear function that activates when the input is positive and remains dormant when negative. Mathematically, it can be defined as:

f(x) = max(0, x)

Here, x represents the input to the function. If x is positive, the output is equal to x. If x is zero or negative, the output is zero. This simple rule is at the heart of ReLU’s effectiveness.
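The rule above can be sketched in a few lines of plain Python. The function names `relu` and `relu_grad` are chosen for this illustration, and returning 0 for the derivative at exactly x = 0 is a common convention assumed here, since the derivative is not defined at that point.

```python
def relu(x: float) -> float:
    """Return max(0, x): x for positive inputs, 0 otherwise."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU: 1 for x > 0, 0 for x < 0.

    The derivative is undefined at x == 0; returning 0 there is an
    assumption of this sketch, not part of the definition.
    """
    return 1.0 if x > 0 else 0.0


inputs = [-2.0, -0.5, 0.0, 0.5, 2.0]
outputs = [relu(x) for x in inputs]
grads = [relu_grad(x) for x in inputs]

for x, y, g in zip(inputs, outputs, grads):
    print(f"x={x:+.1f}  relu(x)={y:.1f}  gradient={g:.0f}")
```

Negative inputs and zero map to zero, while positive inputs pass through unchanged with a gradient of 1.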

Advantages of ReLU

1. Simplicity and Efficiency

Computing ReLU takes only a comparison with zero, with no exponentials as in sigmoid or tanh, which makes it cheap to evaluate in both the forward and backward pass.

2. Avoiding Vanishing Gradient

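ReLU helps mitigate the vanishing gradient problem: its gradient is exactly 1 for every positive input, so it does not shrink the error signal as it passes back through active units. This allows deeper networks to be trained more stably.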

3. Sparsity

ReLU encourages sparsity in neural networks, as some units remain inactive, leading to more efficient model representations.
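The sparsity point can be illustrated with a small experiment. The sketch below assumes pre-activations drawn from a zero-mean Gaussian, which is an idealization rather than a property of any particular trained network; under that assumption roughly half of the units output exactly zero after ReLU.

```python
import random


def relu(x: float) -> float:
    return max(0.0, x)


# Hypothetical pre-activation values: zero-mean, unit-variance Gaussian.
rng = random.Random(0)
pre_activations = [rng.gauss(0.0, 1.0) for _ in range(10_000)]

activations = [relu(z) for z in pre_activations]

# Fraction of units whose output is exactly zero (inactive units).
sparsity = sum(1 for a in activations if a == 0.0) / len(activations)
print(f"fraction of inactive units: {sparsity:.3f}")
```

In a real network the fraction of inactive units depends on the weights, biases, and data, so it can be well above or below one half.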

Applications of ReLU

ReLU finds applications in various domains, including:

- Image and speech recognition

- Natural language processing

- Autonomous driving

- Game development

- Healthcare diagnostics

Its versatility and effectiveness make it a valuable tool in machine learning and AI.

Challenges in ReLU Implementation

While ReLU offers numerous advantages, it also poses some challenges, such as the “dying ReLU” problem, in which a unit outputs zero for every input and therefore stops receiving gradient updates, and sensitivity to weight initialization. Researchers have developed variants like Leaky ReLU, Parametric ReLU, and ELU to address these issues.
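To make the “dying ReLU” problem concrete, here is a minimal sketch with a single hypothetical neuron `y = relu(w * x + b)` trained with squared error. The data, weights, and the helper `weight_gradients` are invented for illustration. When the bias is so negative that the pre-activation is below zero for every input, both gradients are zero and gradient descent cannot move the neuron out of that state.

```python
def relu(z: float) -> float:
    return max(0.0, z)


def relu_grad(z: float) -> float:
    return 1.0 if z > 0 else 0.0


def weight_gradients(w, b, xs, targets):
    """Mean gradient of squared error w.r.t. w and b for y = relu(w*x + b)."""
    grad_w = grad_b = 0.0
    for x, t in zip(xs, targets):
        z = w * x + b
        y = relu(z)
        # Chain rule: d(loss)/dz = 2 * (y - t) * relu'(z)
        upstream = 2.0 * (y - t) * relu_grad(z)
        grad_w += upstream * x
        grad_b += upstream
    n = len(xs)
    return grad_w / n, grad_b / n


xs = [0.0, 0.25, 0.5, 0.75, 1.0]
targets = [2.0 * x for x in xs]

# Bias so negative that w*x + b < 0 for every input: the unit is "dead".
dead = weight_gradients(w=1.0, b=-5.0, xs=xs, targets=targets)
# A unit that is active on the same inputs still receives a learning signal.
alive = weight_gradients(w=1.0, b=0.1, xs=xs, targets=targets)

print("dead unit gradients: ", dead)
print("alive unit gradients:", alive)
```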

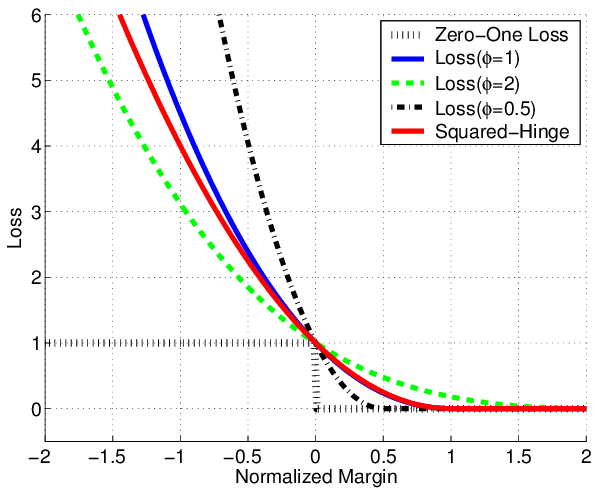

ReLU vs. Sigmoid and Tanh

Compared with traditional activation functions like sigmoid and tanh, ReLU is usually easier to train in deep networks. All three are non-linear, but sigmoid and tanh saturate for large positive and negative inputs, where their gradients approach zero, while ReLU does not saturate for positive inputs.
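Saturation is easy to see numerically. The following sketch evaluates the derivative of each function at a few positive inputs chosen for illustration; the sigmoid and tanh derivatives collapse toward zero as the input grows, while the ReLU derivative stays at 1.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x: float) -> float:
    s = sigmoid(x)
    return s * (1.0 - s)


def tanh_grad(x: float) -> float:
    return 1.0 - math.tanh(x) ** 2


def relu_grad(x: float) -> float:
    return 1.0 if x > 0 else 0.0


for x in (0.5, 5.0, 10.0):
    print(
        f"x={x:>4}: sigmoid'={sigmoid_grad(x):.2e}  "
        f"tanh'={tanh_grad(x):.2e}  relu'={relu_grad(x):.0f}"
    )
```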

Training Deep Neural Networks with ReLU

Training deep neural networks with ReLU involves careful selection of hyperparameters, weight initialization, and regularization. Understanding these aspects is crucial for achieving optimal results.
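The article does not name a specific initialization scheme. One that is commonly paired with ReLU is He (Kaiming) normal initialization, which draws weights from a Gaussian with variance 2 / fan_in. The sketch below is an illustration under that assumption; the function name `he_normal` and the layer sizes are hypothetical.

```python
import math
import random


def he_normal(fan_in: int, fan_out: int, rng: random.Random):
    """Return a fan_out x fan_in weight matrix with std = sqrt(2 / fan_in)."""
    std = math.sqrt(2.0 / fan_in)
    return [[rng.gauss(0.0, std) for _ in range(fan_in)] for _ in range(fan_out)]


rng = random.Random(42)
weights = he_normal(fan_in=256, fan_out=128, rng=rng)

# Check that the empirical variance is close to the target 2 / fan_in.
flat = [w for row in weights for w in row]
mean = sum(flat) / len(flat)
variance = sum((w - mean) ** 2 for w in flat) / len(flat)
print(f"target variance: {2.0 / 256:.5f}, empirical variance: {variance:.5f}")
```

The factor of 2 compensates for ReLU zeroing out roughly half of its inputs, which helps keep the scale of activations roughly constant from layer to layer.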

Fine-tuning ReLU

Standard ReLU has no tunable parameters, but its variants do. Tuning them, such as the slope of the negative region in Leaky ReLU, can further enhance the performance of deep learning models.

Hyperparameter Tuning for ReLU

Hyperparameter tuning plays a pivotal role in maximizing the benefits of ReLU. Adjusting learning rates, batch sizes, and other parameters can significantly impact the model’s convergence.

Overcoming the Vanishing Gradient Problem with ReLU

The vanishing gradient problem can hinder deep network training. ReLU’s inherent ability to mitigate this issue is a key reason behind its widespread adoption.
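A rough way to see why depth matters: during backpropagation the gradient is multiplied by one activation derivative per layer. The sketch below is a deliberate simplification that ignores the weight matrices and looks only at the activation factor. It uses 0.25, the maximum value of the sigmoid derivative, as a best case for sigmoid, and 1.0 for a ReLU unit that is active.

```python
def gradient_scale(per_layer_derivative: float, depth: int) -> float:
    """Product of the activation derivative across `depth` layers."""
    scale = 1.0
    for _ in range(depth):
        scale *= per_layer_derivative
    return scale


SIGMOID_MAX_DERIVATIVE = 0.25   # sigmoid'(0), the largest value it can take
RELU_ACTIVE_DERIVATIVE = 1.0    # relu'(x) for any x > 0

for depth in (5, 10, 20):
    sig = gradient_scale(SIGMOID_MAX_DERIVATIVE, depth)
    rel = gradient_scale(RELU_ACTIVE_DERIVATIVE, depth)
    print(f"depth={depth:>2}: sigmoid factor={sig:.2e}  relu factor={rel:.0f}")
```

Even in its best case the sigmoid factor shrinks exponentially with depth, while the factor along a path of active ReLU units stays at 1.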

ReLU in Convolutional Neural Networks

Convolutional Neural Networks (CNNs) heavily rely on ReLU for image and pattern recognition tasks. Its application in CNNs has led to breakthroughs in computer vision.
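In a CNN, ReLU is typically applied element-wise to the feature map produced by a convolution. The sketch below uses a tiny hand-written image and a hypothetical 2x2 edge filter, both invented for illustration, and implements the "valid" convolution (strictly, cross-correlation, as in most deep learning libraries) in plain Python.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` with no padding and stride 1."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu_map(feature_map):
    """Apply ReLU to every element of a 2D feature map."""
    return [[max(0.0, v) for v in row] for row in feature_map]


image = [
    [0.0, 0.0, 1.0, 1.0],
    [0.0, 0.0, 1.0, 1.0],
    [1.0, 1.0, 0.0, 0.0],
    [1.0, 1.0, 0.0, 0.0],
]
# Responds positively where intensity increases from left to right.
kernel = [
    [-1.0, 1.0],
    [-1.0, 1.0],
]

feature_map = conv2d_valid(image, kernel)
activated = relu_map(feature_map)
print("before ReLU:", feature_map)
print("after ReLU: ", activated)
```

The filter produces a positive response at the dark-to-bright edge and a negative response at the bright-to-dark edge; ReLU keeps the former and zeroes out the latter.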

Optimizing ReLU with Leaky ReLU

Leaky ReLU, a variant of ReLU, addresses the “dying ReLU” problem by giving negative inputs a small non-zero slope instead of zero, so their gradient never vanishes entirely. This modification can make training more robust.
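A minimal sketch of Leaky ReLU follows. The slope value 0.01 is a commonly used default and is an assumption here, not something the article specifies; the parameter name `negative_slope` is likewise chosen for this illustration.

```python
def leaky_relu(x: float, negative_slope: float = 0.01) -> float:
    """Return x for positive inputs and negative_slope * x otherwise."""
    return x if x > 0 else negative_slope * x


def leaky_relu_grad(x: float, negative_slope: float = 0.01) -> float:
    """Gradient is 1 for positive inputs and negative_slope otherwise."""
    return 1.0 if x > 0 else negative_slope


for x in (-2.0, -0.5, 0.5, 2.0):
    print(f"x={x:+.1f}  output={leaky_relu(x):+.3f}  gradient={leaky_relu_grad(x)}")
```

Because the gradient for negative inputs is small but not zero, a unit that drifts into the negative region can still be updated.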

Exponential Linear Unit (ELU)

ELU is another activation function that competes with ReLU. It offers smoothness and robustness to noise in the data, making it an attractive alternative.
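For reference, ELU is usually defined as x for positive inputs and alpha * (exp(x) - 1) otherwise. The sketch below assumes alpha = 1.0, a common default that the article does not state.

```python
import math


def elu(x: float, alpha: float = 1.0) -> float:
    """Return x for x > 0, and alpha * (exp(x) - 1) otherwise."""
    # math.expm1(x) computes exp(x) - 1 accurately for small x.
    return x if x > 0 else alpha * math.expm1(x)


for x in (-5.0, -1.0, 0.0, 1.0, 5.0):
    print(f"x={x:+.1f}  elu(x)={elu(x):+.4f}")
```

Unlike ReLU, the output approaches -alpha smoothly for large negative inputs instead of being cut off at zero.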

Parametric ReLU (PReLU)

PReLU turns the negative-region slope of Leaky ReLU into a learnable parameter that is trained along with the network’s weights, enhancing its adaptability to different data distributions.
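The idea of a learnable slope can be sketched with plain gradient descent on a single PReLU unit. Everything here is illustrative: the data, the starting slope, the learning rate, and the target slope of 0.1 are invented for the example.

```python
def prelu(x: float, a: float) -> float:
    """Return x for positive inputs and a * x otherwise; `a` is learnable."""
    return x if x > 0 else a * x


def prelu_grad_a(x: float) -> float:
    """Derivative of prelu(x, a) with respect to the slope `a`."""
    return 0.0 if x > 0 else x


xs = [-2.0, -1.0, -0.5, 1.0, 2.0]
targets = [prelu(x, 0.1) for x in xs]   # data generated with slope 0.1

a = 0.25              # starting slope, chosen for this sketch
learning_rate = 0.1

for _ in range(200):
    # Mean gradient of squared error with respect to a.
    grad = sum(
        2.0 * (prelu(x, a) - t) * prelu_grad_a(x) for x, t in zip(xs, targets)
    ) / len(xs)
    a -= learning_rate * grad

print(f"learned slope: {a:.6f}")
```

Only the negative inputs contribute to the gradient of the slope, since the positive branch does not depend on it.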

Swish Activation Function

Swish is a more recent activation function that multiplies its input by the sigmoid of that input, combining elements of sigmoid and ReLU. It has shown promising results in various deep learning applications.
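Swish is commonly written as x * sigmoid(beta * x). The sketch below assumes beta = 1, the simplest form, and keeps inputs in a moderate range so that the plain sigmoid formula does not overflow.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def swish(x: float, beta: float = 1.0) -> float:
    """Return x * sigmoid(beta * x)."""
    return x * sigmoid(beta * x)


for x in (-10.0, -1.0, 0.0, 1.0, 10.0):
    print(f"x={x:+5.1f}  swish(x)={swish(x):+.4f}")
```

For large positive inputs Swish behaves like ReLU, and for large negative inputs it approaches zero, but in between it dips slightly below zero, so it is smooth and not monotonic.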

Comparing ReLU Variants

Choosing the right variant of ReLU depends on the specific task and dataset. Comparing ReLU variants can help identify the most suitable one.

Industry Impact of ReLU

ReLU has had a profound impact on various industries. Its contributions to image recognition, natural language processing, and healthcare have paved the way for innovative solutions and advancements.

Success Stories with ReLU

Numerous success stories demonstrate the transformative potential of ReLU. From self-driving cars to medical diagnostics, ReLU has played a pivotal role in shaping the future of technology.

FAQs about ReLU in Machine Learning

Q: What is the main advantage of ReLU over sigmoid and tanh?

ReLU’s main advantage is that it does not saturate for positive inputs, so gradients do not shrink toward zero there, which allows for more efficient training of deep neural networks.

Q: Are there any drawbacks to using ReLU?

While ReLU offers many benefits, it can suffer from the “dying ReLU” problem, where some units output zero for every input and stop updating during training.

Q: Can I use ReLU in any type of neural network?

Yes, ReLU is compatible with various neural network architectures, including feedforward, convolutional, and recurrent networks.

Q: What are some common variants of ReLU?

Common variants include Leaky ReLU, Parametric ReLU (PReLU), and Exponential Linear Unit (ELU).

Q: How can I fine-tune ReLU parameters for my model?

Standard ReLU has no parameters to tune. For variants like Leaky ReLU, fine-tuning usually means adjusting the slope of the negative region.

Q: What is the industry impact of ReLU in machine learning?

ReLU has significantly impacted industries like computer vision, natural language processing, and healthcare, enabling breakthroughs in technology.

Conclusion

In the dynamic field of machine learning, understanding ReLU is a valuable asset. Its simplicity, efficiency, and effectiveness make it a cornerstone of deep learning. As you embark on your journey into the world of artificial intelligence, remember that ReLU is a powerful tool in your toolkit. Embrace it, experiment with its variants, and unlock the full potential of neural networks.
